Agentic AI Is a Distributed System Wearing a Chat Mask

Why modern AI behaves more like microservices than chat - and what that means for reliability

For most of the past two years, the industry has talked about generative AI as if it were a better autocomplete. You type something. The model replies. That mental model was roughly correct when large language models were only used for drafting emails, summarizing documents or answering questions.

It is no longer correct.

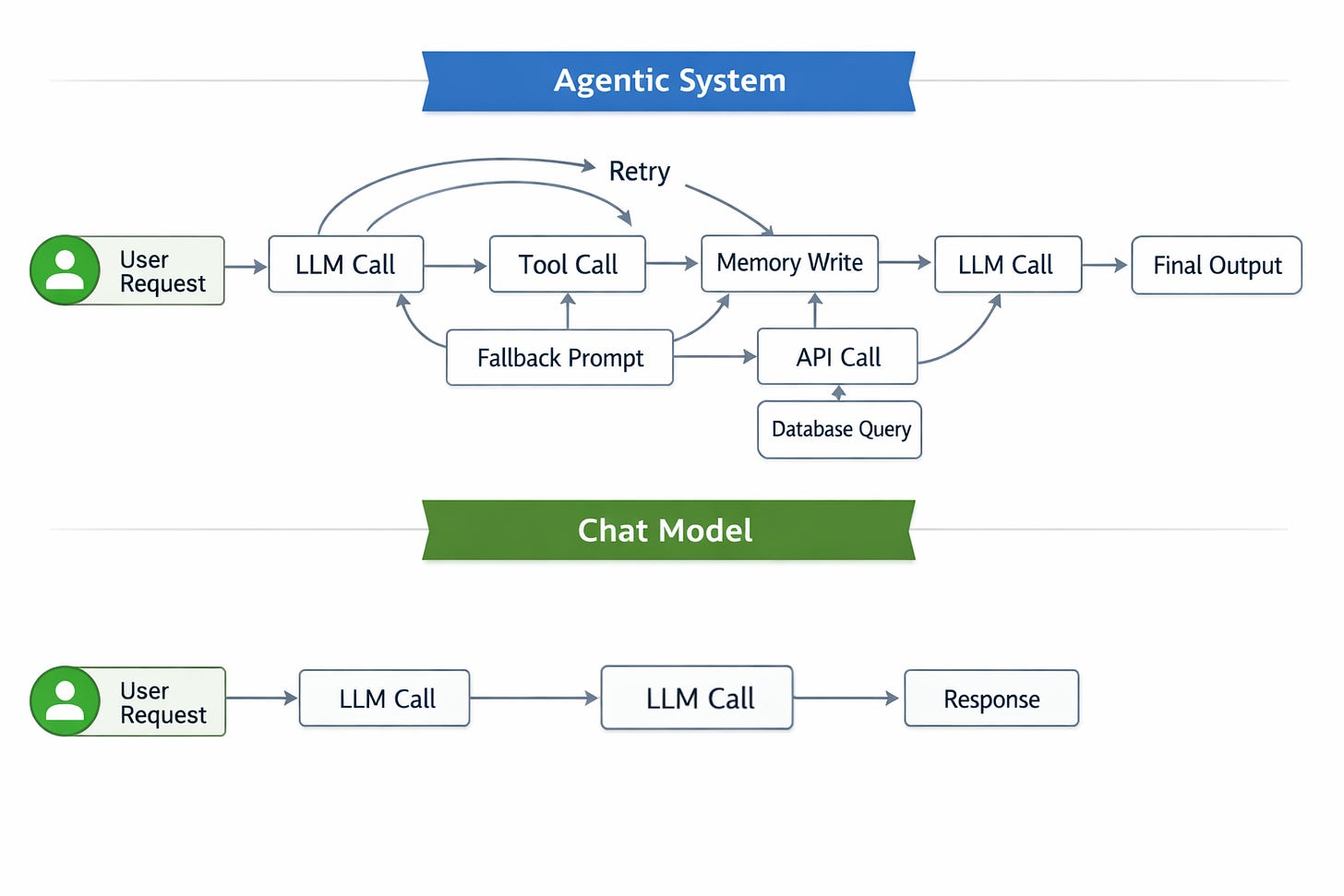

The moment you give a model the ability to call tools, store memory, retry failed steps and decide what to do next, you have crossed a boundary. You are no longer running a “model.” You are running a distributed system whose control logic happens to be probabilistic.

This is not a metaphor. It is an architectural fact.

An agentic system has all the properties of a distributed service:

Branching execution paths

Retries and backoffs

Partial failures

Fan-out to multiple dependencies

State that persists across requests

The only thing that is new is that the routing logic is learned rather than coded.

And that is why so many teams feel like their agents are unpredictable, expensive and impossible to debug. They are operating a distributed system with chat-era tooling.
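To make the "learned rather than coded" distinction concrete, here is a minimal sketch. Everything in it is hypothetical: the step names (`fetch`, `summarize`), the `Router` type and the seeded random sampler that stands in for a model. The point is only that the surrounding loop is identical in both cases; what changes is whether the next step is fixed by code or sampled.

```python
import random
from typing import Callable, List

# A router looks at the steps taken so far and names the next one ("done" to stop).
Router = Callable[[List[str]], str]

def coded_router(history: List[str]) -> str:
    """Classic control flow: the path is fixed in code."""
    plan = ["fetch", "summarize"]
    return plan[len(history)] if len(history) < len(plan) else "done"

def make_sampled_router(seed: int) -> Router:
    """Stand-in for a model: the next step is sampled, not hard-coded."""
    rng = random.Random(seed)

    def router(history: List[str]) -> str:
        if "summarize" in history:
            return "done"
        if not history:
            return "fetch"
        # After a fetch, the "model" usually summarizes but sometimes fetches again.
        return rng.choices(["summarize", "fetch"], weights=[0.7, 0.3])[0]

    return router

def run(router: Router, max_steps: int = 10) -> List[str]:
    """The same loop drives both routers; only the decision source differs."""
    history: List[str] = []
    while len(history) < max_steps:
        step = router(history)
        if step == "done":
            break
        history.append(step)
    return history

coded_path = run(coded_router)
sampled_paths = [run(make_sampled_router(seed)) for seed in range(5)]
```

The coded router always yields the same two-step path. The sampled router can yield paths of different lengths for different seeds, which is the behavior teams experience as unpredictability.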

Why two identical agent runs behave differently

One of the most common complaints about agents is that they “sometimes work and sometimes don’t.” The same prompt on Monday behaves differently on Tuesday. One run finishes cleanly; another gets stuck in a loop or explodes in cost.

That is not because the model suddenly forgot how to reason. It is because the system’s execution path changed.

In an agent stack, a single request may involve:

An initial LLM call

A decision about which tool to invoke

A call to a database or API

A follow-up LLM call with new context

A memory read or write

One or more fallbacks

Each of those steps can succeed, fail or branch differently. The model may see a slightly different context. A tool may time out and trigger a retry. A memory entry may be added or skipped.

When none of that is visible, the behavior looks random.
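A small sketch can show how the same request produces different execution paths. It assumes a hypothetical agent with one tool call, a retry limit and a fallback; the step labels and the `make_flaky_tool` helper are invented for illustration and do not come from any particular framework.

```python
from typing import Callable, List

def run_agent(tool: Callable[[], str], max_retries: int = 2) -> List[str]:
    """Run one hypothetical agent request and return the steps it took."""
    path = ["llm:plan", "tool:select"]
    for attempt in range(max_retries + 1):
        try:
            tool()
            path.append(f"tool:call#{attempt + 1}:ok")
            break
        except TimeoutError:
            path.append(f"tool:call#{attempt + 1}:timeout")
    else:
        # Every attempt timed out, so the agent answers without the tool.
        path.append("fallback:answer_without_tool")
        return path
    path.append("llm:followup")
    path.append("memory:write")
    return path

def healthy_tool() -> str:
    return "42 rows"

def make_flaky_tool(failures: int) -> Callable[[], str]:
    """Return a tool that times out `failures` times before succeeding."""
    state = {"remaining": failures}

    def tool() -> str:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise TimeoutError("upstream timed out")
        return "42 rows"

    return tool

# Same request, three different dependency conditions.
monday = run_agent(healthy_tool)
tuesday = run_agent(make_flaky_tool(1))
wednesday = run_agent(make_flaky_tool(5))

for label, path in [("monday", monday), ("tuesday", tuesday), ("wednesday", wednesday)]:
    print(label, " -> ".join(path))
```

In this sketch the prompt never changes. One run is clean, one includes a retry, and one skips the follow-up call and the memory write entirely because it fell back. From the outside, only the final answer differs.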

In distributed systems, this problem has long been addressed with tracing, call graphs and state inspection. In agentic AI, we are pretending it does not exist.

Why prompt logs are no longer enough

Most GenAI stacks still log:

The user prompt

The model response

That is the equivalent of logging HTTP requests and responses without logging what your backend services did.

Imagine trying to debug a microservice outage with only:

“User called /checkout”

“User got 500 error”

You would have no idea:

Which service failed

Which dependency timed out

Which database query was slow

Which cache was stale

That is exactly what teams are doing with agents today.

When an agent fails, what you need is not the conversation. You need the execution:

Which tools were called?

With what inputs?

What did they return?

What context was passed forward?

What state changed?

That is the only way to know whether a failure came from the model, a tool, the data or the control flow.
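The questions above map naturally onto a span-style trace record. What follows is a minimal sketch using only the Python standard library, not the API of any tracing product; the `Span` and `Trace` classes, their field names and the `lookup_order` tool are all assumptions made for illustration.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Any, Dict, Iterator, List, Optional

@dataclass
class Span:
    span_id: int
    parent_id: Optional[int]   # which step triggered this one
    kind: str                  # e.g. "llm", "tool", "memory"
    name: str
    inputs: Dict[str, Any]     # what the step was called with
    output: Any = None         # what it returned
    error: Optional[str] = None
    duration_ms: float = 0.0

class Trace:
    """Collects spans for one agent run, preserving parent/child structure."""

    def __init__(self) -> None:
        self.spans: List[Span] = []
        self._stack: List[int] = []

    @contextmanager
    def span(self, kind: str, name: str, **inputs: Any) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        s = Span(len(self.spans), parent, kind, name, inputs)
        self.spans.append(s)
        self._stack.append(s.span_id)
        start = time.perf_counter()
        try:
            yield s
        except Exception as exc:
            # Record the failure on the span, then let it propagate.
            s.error = f"{type(exc).__name__}: {exc}"
            raise
        finally:
            s.duration_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()

# Hypothetical run: the planned tool times out and a cached lookup is used instead.
trace = Trace()
with trace.span("llm", "plan", prompt="refund order 17") as root:
    root.output = {"tool": "lookup_order"}
    try:
        with trace.span("tool", "lookup_order", order_id=17):
            raise TimeoutError("orders API timed out")
    except TimeoutError:
        with trace.span("tool", "lookup_order_cache", order_id=17) as cached:
            cached.output = {"status": "shipped"}

failed = [s.name for s in trace.spans if s.error]
```

With a record like this, a prompt log would show a normal-looking answer, while the trace shows that `lookup_order` failed and the answer was built from cached data.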

Why this becomes a reliability issue

In any serious system, reliability is not just about success rates. It is about whether failures are understandable and fixable.

If an agent loops infinitely, calls the wrong API or corrupts its own memory, and you cannot reconstruct how that happened, you cannot prevent it from happening again. You can only tweak prompts and hope.

That is not engineering. It is superstition.

Agentic AI does not need better prompts to become reliable. It needs the same things every distributed system needs:

Observability

State inspection

Execution traces

Control boundaries

Until those exist, agents will remain fragile and expensive.
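Of the four needs listed, a control boundary is the simplest to sketch. The example below assumes a hypothetical `RunGuard` that the agent loop consults before every tool call; the class, its limits and the `search` tool name are illustrative choices, not part of any specific framework.

```python
import json
from collections import Counter
from typing import Any, Dict

class BudgetExceeded(RuntimeError):
    """Raised when an agent run crosses a configured boundary."""

class RunGuard:
    """Enforces a step budget and stops identical repeated tool calls."""

    def __init__(self, max_steps: int = 20, max_repeats: int = 3) -> None:
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self._seen: Counter = Counter()

    def check(self, tool: str, args: Dict[str, Any]) -> None:
        """Call before every tool invocation; raises instead of letting the run continue."""
        self.steps += 1
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step budget of {self.max_steps} exhausted")
        # Identical tool + arguments repeated too often is treated as a loop.
        key = (tool, json.dumps(args, sort_keys=True))
        self._seen[key] += 1
        if self._seen[key] > self.max_repeats:
            raise BudgetExceeded(
                f"{tool} called {self._seen[key]} times with identical arguments"
            )

# Stand-in for an agent stuck retrying the same call.
guard = RunGuard(max_steps=10, max_repeats=2)
try:
    while True:
        guard.check("search", {"q": "order 17"})
except BudgetExceeded as exc:
    stopped_because = str(exc)
```

The guard does not make the agent smarter. It turns an open-ended loop into a bounded failure with a stated reason, which is what makes the failure something you can investigate.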

Open question

When one of your agents misbehaves, can you reconstruct its full execution path step by step - or are you left guessing from a prompt and a final answer?

We’re FortifyRoot - the LLM Cost, Safety & Audit Control Layer for Production GenAI.
