AI Gateway vs Middleware: The Control Plane GenAI Actually Needs

Gateways Route Traffic. Middleware Ensures Trust

GenAI Is Entering the Reliability Era - And Gateways Aren’t Enough

Most enterprise GenAI deployments start with a straightforward architecture:

App → LLM API Gateway → Model Provider

It works… until it doesn’t.

Teams begin seeing:

Hallucinations creeping into user-visible features

Costs spiking without increased traffic

Compliance concerns surfacing from unpredictable outputs

Quality degrading over time despite no code changes

This is where AI Gateways hit their limits - and where the shift to AI Middleware (Control Plane) becomes inevitable.

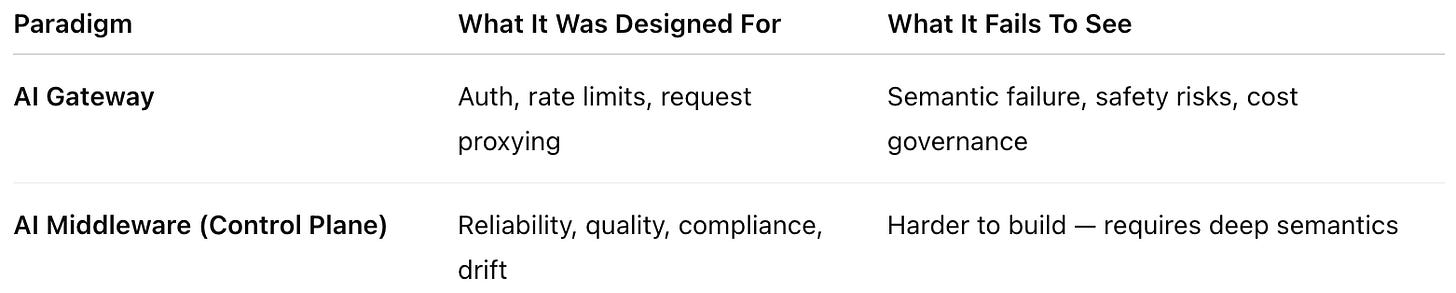

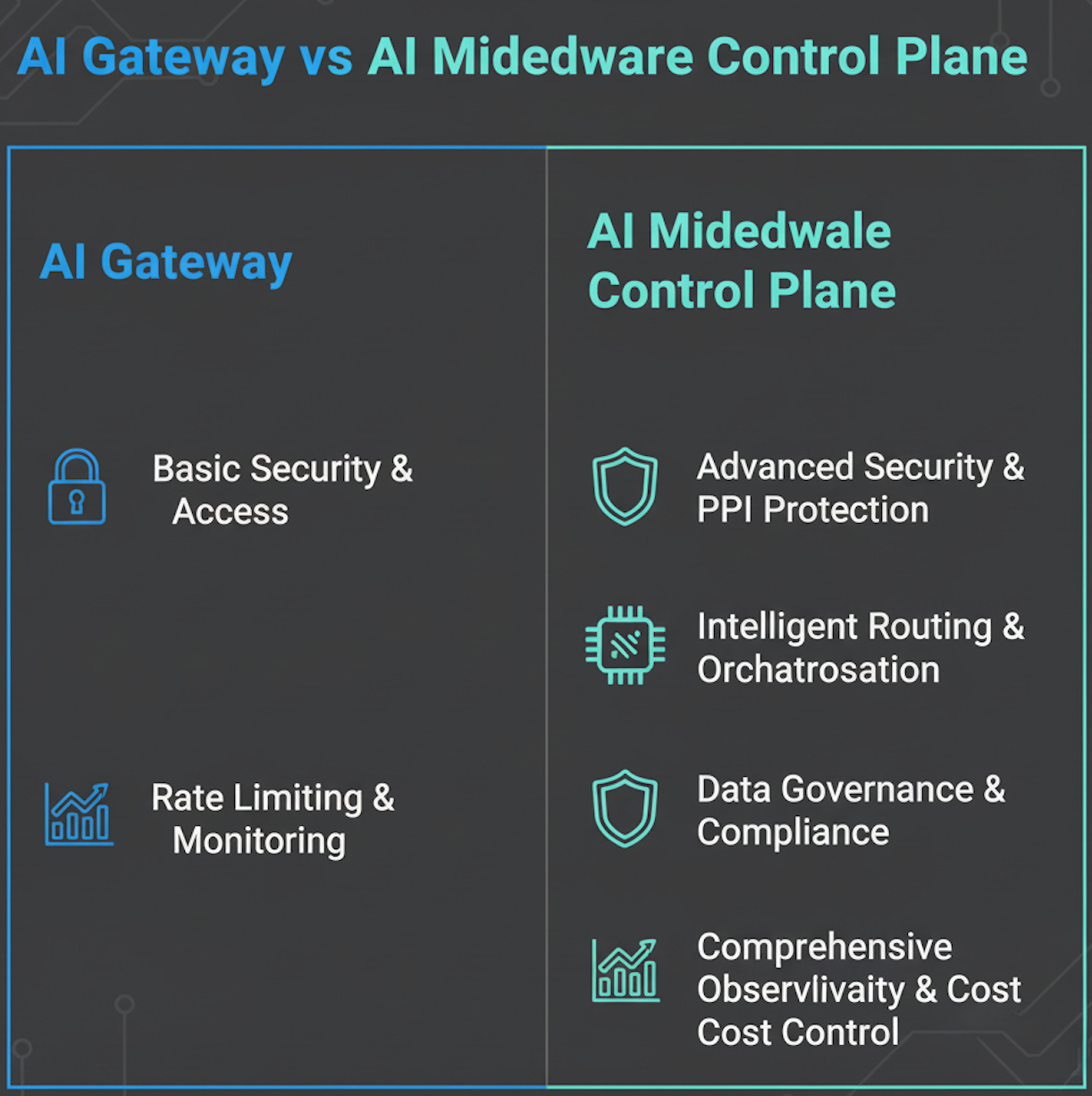

Two Philosophies Are Emerging in GenAI Infrastructure

Gateways keep the system running.

Middleware keeps the system right.

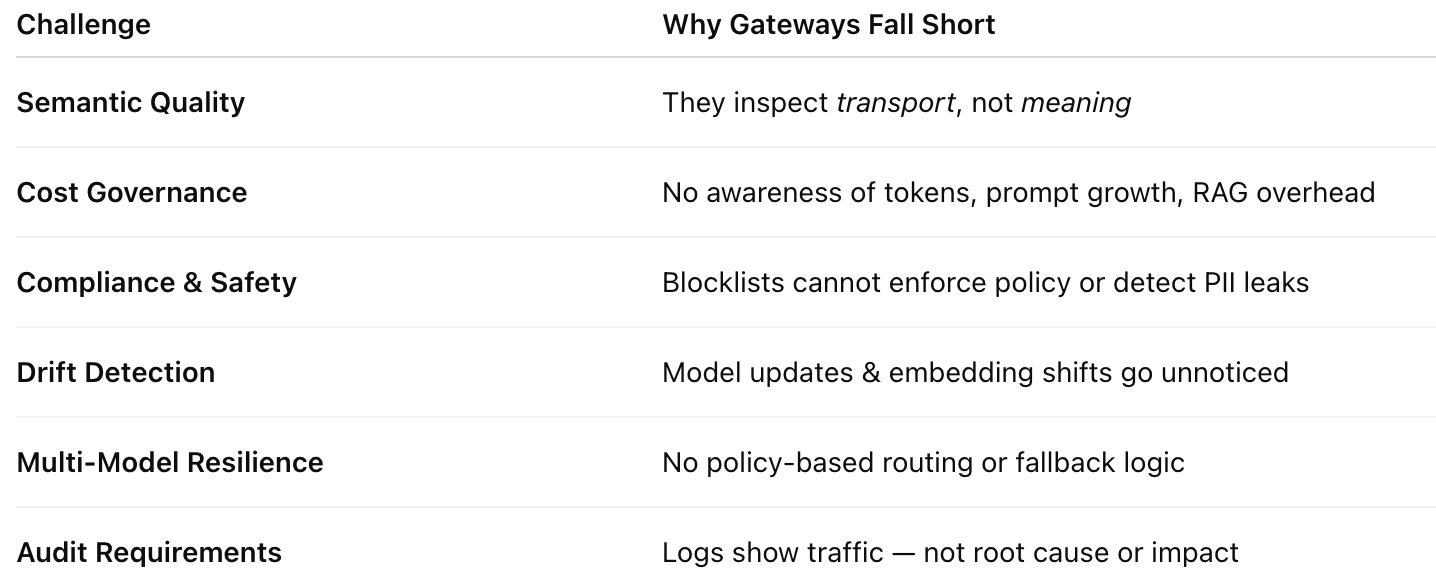

Why Gateways Can’t Handle GenAI Failure Modes

LLMs are not deterministic microservices.

The traditional gateway mindset breaks down because:

Gateways monitor:

HTTP status codes (200 OK)

Latency

Error rates

But GenAI failure is:

Silent (no errors)

Semantic (nonsense with 200 OK)

Dynamic (model changes behind the scenes)
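The Python sketch below makes the "200 OK with nonsense" failure concrete. The `LLMResponse` record, the latency budget, the 0.8 threshold and the refund-policy text are all hypothetical. The word-overlap `grounded_ratio` is a deliberately crude stand-in for real grounding evaluation. Both answers pass the transport-level check, and only the semantic check tells them apart.

```python
import re
from dataclasses import dataclass

@dataclass
class LLMResponse:
    status: int        # HTTP status code from the provider
    latency_ms: float  # end-to-end latency
    text: str          # generated answer

def gateway_ok(resp: LLMResponse, max_latency_ms: float = 2_000.0) -> bool:
    """Transport-level view: only the status code and latency are inspected."""
    return resp.status == 200 and resp.latency_ms <= max_latency_ms

def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def grounded_ratio(answer: str, context: str) -> float:
    """Share of the answer's distinct words that also occur in the retrieved context."""
    answer_words = tokens(answer)
    if not answer_words:
        return 0.0
    return len(answer_words & tokens(context)) / len(answer_words)

# Hypothetical retrieved context and two responses that both return 200 OK quickly.
context = "Refunds are accepted within 30 days of purchase with a receipt."
good = LLMResponse(200, 850.0, "Refunds are accepted within 30 days with a receipt.")
bad = LLMResponse(200, 790.0, "Refunds are available for 90 days, no receipt needed.")

for name, resp in (("good", good), ("bad", bad)):
    score = grounded_ratio(resp.text, context)
    print(f"{name}: gateway_ok={gateway_ok(resp)} grounded={score:.2f} semantic_ok={score >= 0.8}")
# good: gateway_ok=True grounded=1.00 semantic_ok=True
# bad: gateway_ok=True grounded=0.44 semantic_ok=False
```

A production system would swap the overlap heuristic for a stronger check. The shape stays the same: the check needs both the retrieved context and the answer text, and a status-code monitor never looks at either.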

So while gateways stare at HTTP telemetry, what enterprises need to know is:

Did retrieval provide the right facts?

Did the model hallucinate?

Did output violate safety?

Has the model silently drifted?

Is this query costing 5x more today?

Gateways cannot answer those questions - by design.
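One way to answer "Has the model silently drifted?" is a golden-prompt regression. You re-run a fixed set of prompts on a schedule and compare today's answers with the answers recorded when the feature shipped. The sketch below assumes a hypothetical `BASELINE` dictionary. It uses `difflib.SequenceMatcher` character similarity as a cheap stand-in for embedding similarity, and its 0.8 threshold is illustrative, not a recommendation.

```python
from difflib import SequenceMatcher

# Hypothetical golden set: fixed prompts and the answers recorded at launch.
BASELINE = {
    "What is the refund window?": "Refunds are accepted within 30 days of purchase.",
    "Do you ship internationally?": "Yes, we ship to over 40 countries.",
}

def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a cheap stand-in for embedding similarity."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def drift_report(current: dict[str, str], threshold: float = 0.8) -> dict:
    """Compare today's answers with the baseline and flag drift on low mean similarity."""
    scores = {prompt: similarity(answer, current[prompt]) for prompt, answer in BASELINE.items()}
    mean_sim = sum(scores.values()) / len(scores)
    return {
        "mean_similarity": round(mean_sim, 3),
        "drifted": mean_sim < threshold,
        "worst_prompt": min(scores, key=scores.get),
    }

# Today's answers from re-running the golden prompts against the "same" model name.
today = {
    "What is the refund window?": "Refunds are accepted within 30 days of purchase.",
    "Do you ship internationally?": "I'm sorry, I can't help with shipping questions.",
}
print(drift_report(today))
```

In this example the refund answer is unchanged, but the shipping answer has become a refusal. The mean similarity therefore falls below the threshold and `worst_prompt` points at the regression, even though every request returned 200 OK.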

Where AI Gateways Hit Hard Walls

Semantic failure is invisible to gateways.

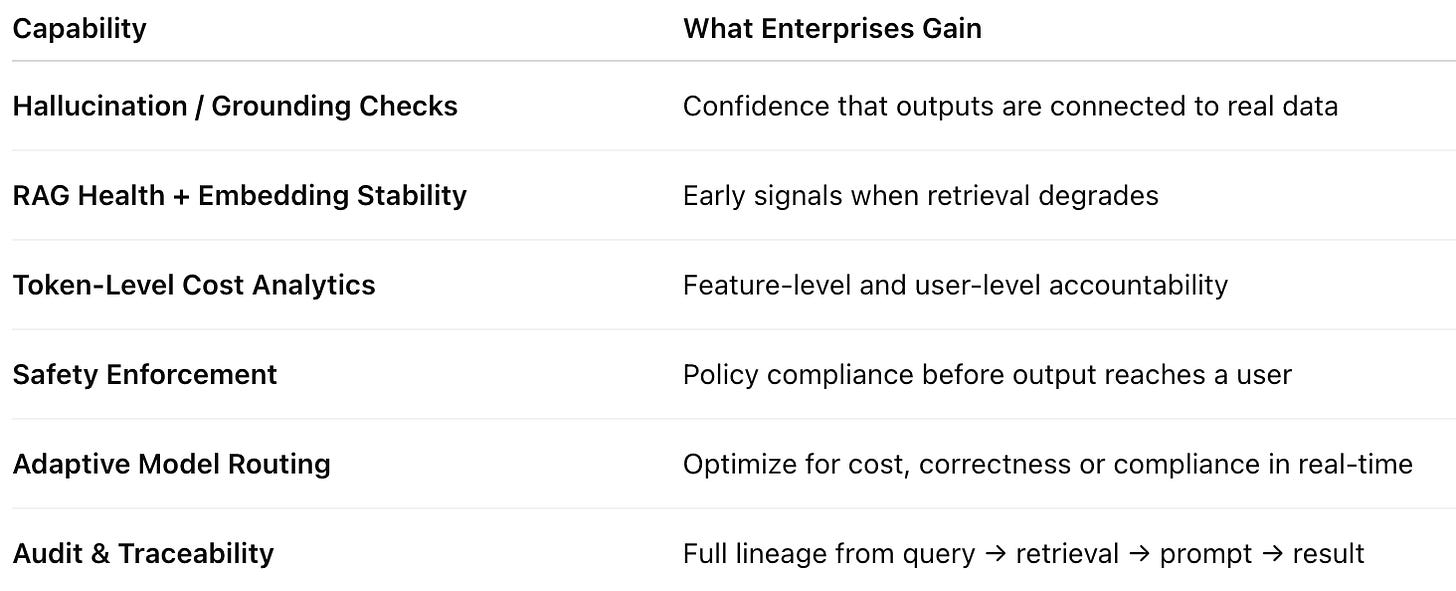

The AI Middleware Mindset: Control Over Meaning

AI Middleware introduces semantic observability and governance.

Middleware is not a proxy.

Middleware is a reliability system.
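The difference between a proxy and a reliability system shows up in what happens around the model call. The sketch below shows one possible shape, not any vendor's actual implementation. It redacts PII from the prompt, applies an output policy and writes an audit record for every call. The regexes, the `BLOCKED_TERMS` policy, the in-memory `AUDIT_LOG` and the four-characters-per-token estimate are all hypothetical simplifications. `call_model` can be any function that takes a prompt and returns text.

```python
import hashlib
import re
import time
from typing import Callable

# Hypothetical detectors; real PII detection needs far more than two regexes.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}
BLOCKED_TERMS = ("diagnosis:",)  # illustrative output policy only
AUDIT_LOG: list[dict] = []       # stand-in for durable, append-only audit storage

def redact(text: str) -> tuple[str, list[str]]:
    """Replace each PII match with a label and report which kinds were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label.upper()}]", text)
    return text, found

def guarded_call(prompt: str, call_model: Callable[[str], str]) -> str:
    """Run one model call through input redaction, an output policy, and auditing."""
    safe_prompt, pii_found = redact(prompt)
    output = call_model(safe_prompt)
    violations = [term for term in BLOCKED_TERMS if term in output.lower()]
    if violations:
        output = "I can't help with that request."
    AUDIT_LOG.append({
        "ts": time.time(),
        "prompt_sha256": hashlib.sha256(safe_prompt.encode()).hexdigest(),
        "pii_redacted": pii_found,
        "policy_violations": violations,
        # Rough estimate (about 4 characters per token); prefer the provider's usage data.
        "est_tokens": (len(safe_prompt) + len(output)) // 4,
    })
    return output

# Usage with a stub model that just echoes the prompt it receives.
print(guarded_call("Email jane@example.com re SSN 123-45-6789", lambda p: "Echo: " + p))
print(AUDIT_LOG[-1]["pii_redacted"])
```

Keeping `call_model` injectable means the same checks apply whichever provider or gateway sits underneath. That is the layering described here: the gateway still moves the bytes, and the middleware decides whether they are acceptable.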

Where GenAI Can Break in the Real World

Retail support bot: Drifted tone → thousands of negative reviews

Healthcare assistant: Hallucinated diagnosis → compliance intervention

B2B SaaS: Context growth → 5x cost increase overnight

Finance workflows: PII exposure → SOC 2 violation risk

LLMs don’t need to crash to cause catastrophe.
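The B2B SaaS case can be checked with back-of-the-envelope arithmetic. LLM APIs bill per token, so with traffic held flat, cost scales with tokens per request. A context that quietly grows from 2,000 to 14,000 input tokens per request is therefore enough to produce a 5x bill. The prices, token counts, request volume and 2x alert threshold below are hypothetical.

```python
# Hypothetical prices in USD per million tokens; substitute your provider's rates.
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00

def daily_cost(requests: int, input_tokens: int, output_tokens: int) -> float:
    """Daily spend in USD when every request uses the given token counts."""
    # tokens x price-per-million gives dollars scaled by 1e6; divide once at the end.
    scaled = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return requests * scaled / 1_000_000

def cost_alert(today: float, baseline: float, max_ratio: float = 2.0) -> bool:
    """Flag spend that exceeds the baseline by more than max_ratio."""
    return baseline > 0 and today / baseline > max_ratio

# Same traffic both days; only the prompt context grows (e.g. untrimmed chat history).
yesterday = daily_cost(10_000, input_tokens=2_000, output_tokens=200)
today = daily_cost(10_000, input_tokens=14_000, output_tokens=200)

print(f"yesterday ${yesterday:.2f}, today ${today:.2f}, ratio {today / yesterday:.1f}x")
# yesterday $90.00, today $450.00, ratio 5.0x
print("alert:", cost_alert(today, yesterday))
# alert: True
```

A gateway sees the same request count and the same 200 OK responses on both days. The spike only becomes visible when tokens per request are tracked against a baseline.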

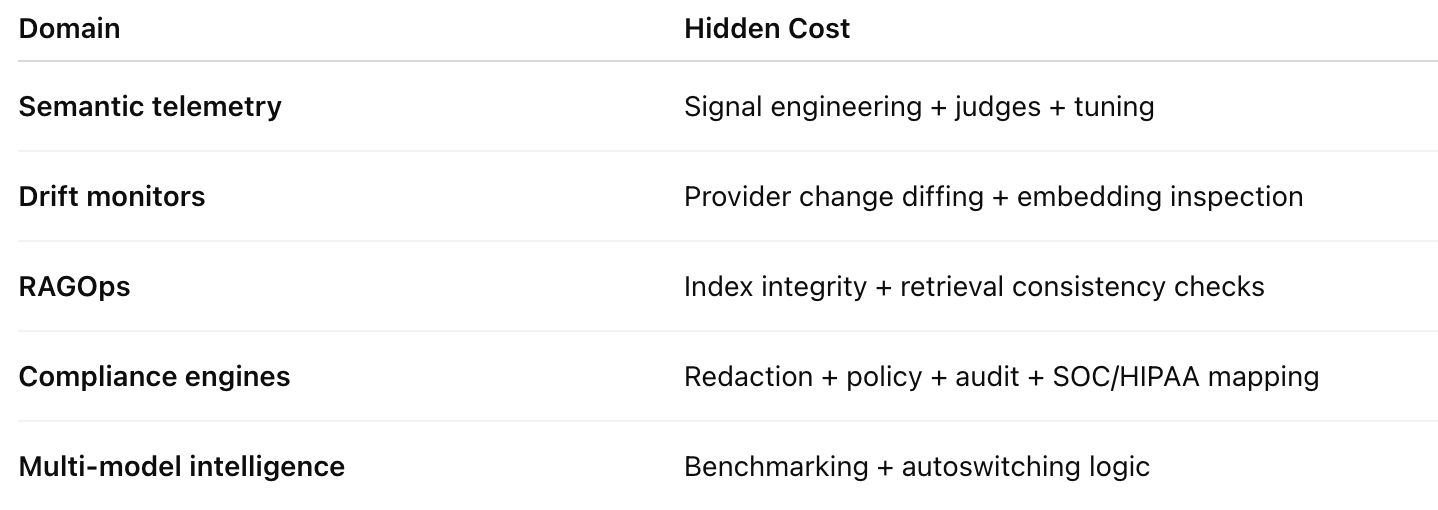

Can Enterprises Build Their Own Control Plane?

Short answer: yes… but only after years of complexity.

Internal teams commonly underestimate this by 12-18 months of engineering effort.

By then?

Production incidents have already created risk.

The Coming Industry Split

AI Gateways will help you start.

AI Middleware will help you scale safely.

Gateways solve transport.

Middleware solves trust.

The difference determines whether GenAI becomes:

A production-critical system, or

A stalled prototype

Final Thought for Every Enterprise Leader

Do we want routing - or do we want control?

Because the organizations that master:

Semantic monitoring

Cost governance

Safety enforcement

Compliance-grade auditing

Adaptive routing

Drift resilience

…will be the ones who own the future of enterprise GenAI.

Who is going to build that control plane first?

We’re FortifyRoot - the LLM Cost, Safety & Audit Control Layer for Production GenAI.

If you’re facing unpredictable LLM spend or safety risks, or you need auditability across GenAI workloads, we’d be glad to help.
