LLM Cost Optimization Part 1: The Hidden Costs Behind the Hype

Why cost-efficiency is becoming the new frontier of GenAI infrastructure

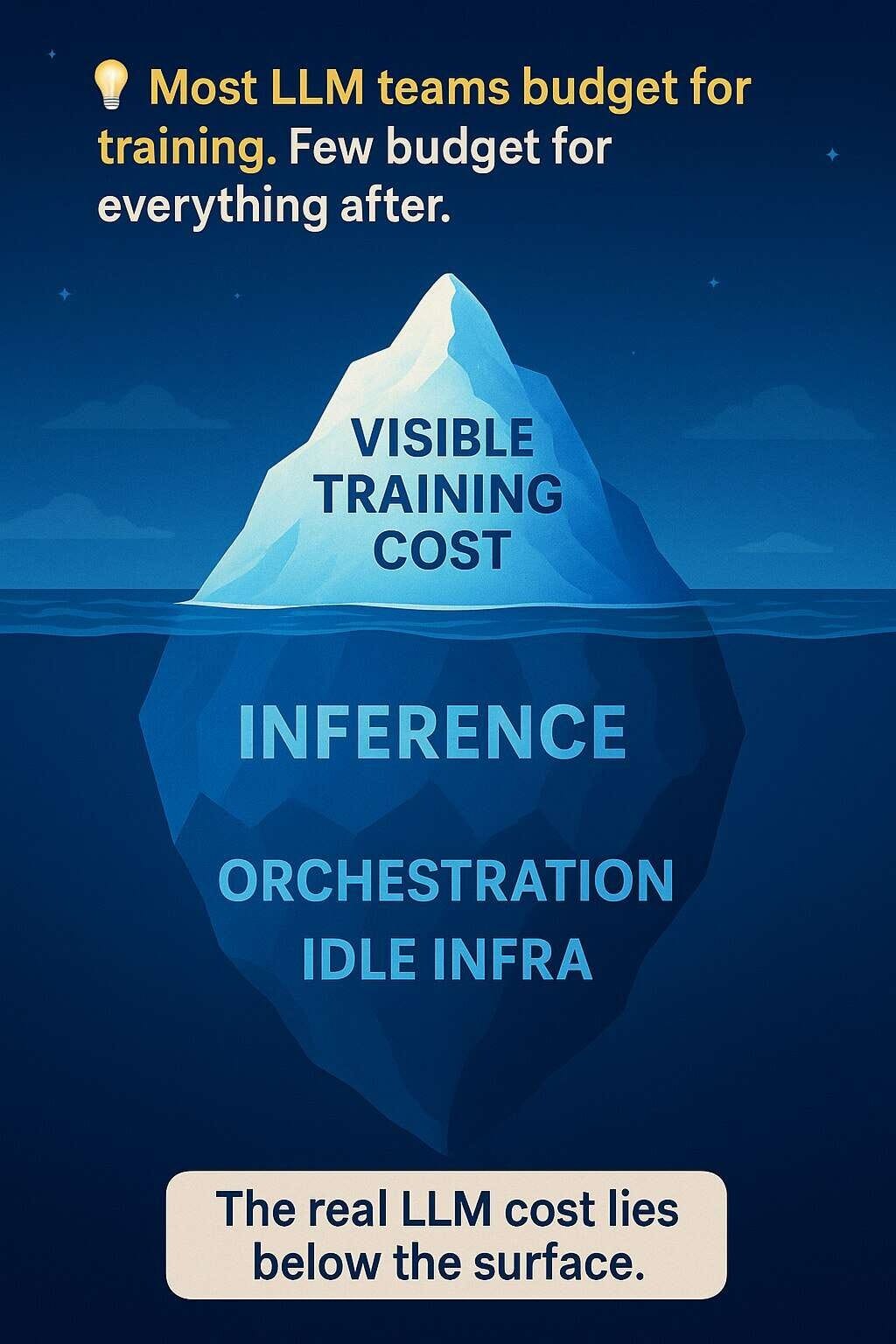

The Quiet Problem No One Budgets For

Between 2023 and 2025, enterprise teams rushed to embed large language models (LLMs) into production: chat assistants, RAG systems, summarisation bots. The excitement was justified. What many engineering leads discovered only later was a cost curve nobody had budgeted for.

Training GPT-4 reportedly cost more than $100 million, and estimates for Google’s Gemini run past $191 million in compute and energy (Cudo Compute). Yet those headline numbers hide a deeper story: most Small and Medium-sized Enterprises (SMEs) never train a frontier model, so what hurts them is not training but inference and orchestration, which they pay for on every query.

Even as inference prices fall, Andreessen Horowitz notes a paradox: enterprise LLM bills are rising, not shrinking, because of inefficient prompts, static RAG pipelines and poor utilisation (a16z.com).

Anatomy of an Invisible Bill

What drives those runaway costs?

Token economics: Commercial APIs bill for both input and output tokens, and output tokens usually cost more per token, so every extra token in a prompt or a long answer burns budget. Token calculators show prices ranging from under $1 to $15 per million tokens depending on the model and context window (OpenAI Pricing).

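Model size obsession: Teams often default to “largest = best.” But empirical work such as FrugalGPT shows that a cascade of cheaper models, escalating to the largest only when needed, can match the best single model’s performance at up to 98% lower cost on the benchmarks it evaluated.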

Retrieval overhead: Many RAG pipelines pass retrieved documents into the context window wholesale. Every extra kilobyte of context becomes billed input tokens.

Idle infrastructure: Self-hosting looks cheaper until utilisation drops. Dell’s 2025 analysis found that on-premises inference for a 70B-parameter model could be 4.1x cheaper than an API, but only under high, steady load (Dell Technologies White Paper).

Scaling friction: Under-instrumented systems waste GPU hours through small batch sizes, poor request routing and cold starts.
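The token-economics and retrieval-overhead points above reduce to simple arithmetic. The sketch below estimates monthly spend for one model tier; the prices, token counts and traffic are hypothetical placeholders, not any vendor’s list prices, and `ModelPrice`, `query_cost` and `monthly_cost` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Per-million-token prices in USD. Values used below are hypothetical."""
    input_per_m: float
    output_per_m: float


def query_cost(price: ModelPrice, prompt_tokens: int,
               context_tokens: int, output_tokens: int) -> float:
    """Cost of one call: prompt plus retrieved context are both billed as input."""
    input_tokens = prompt_tokens + context_tokens
    return (input_tokens * price.input_per_m
            + output_tokens * price.output_per_m) / 1_000_000


def monthly_cost(price: ModelPrice, prompt_tokens: int, context_tokens: int,
                 output_tokens: int, queries_per_month: int) -> float:
    """Scale the per-query cost by expected monthly traffic."""
    per_query = query_cost(price, prompt_tokens, context_tokens, output_tokens)
    return per_query * queries_per_month


# Hypothetical tier priced at $2.50 input / $10 output per million tokens.
tier = ModelPrice(input_per_m=2.50, output_per_m=10.00)

# Same traffic, with 4,000 vs 1,000 tokens of retrieved context per query.
wholesale = monthly_cost(tier, 500, 4_000, 300, 100_000)
trimmed = monthly_cost(tier, 500, 1_000, 300, 100_000)
print(f"wholesale context: ${wholesale:,.2f}/month")  # → $1,425.00/month
print(f"trimmed context:   ${trimmed:,.2f}/month")    # → $675.00/month
```

In this made-up scenario, retrieved context dominates the input tokens, so trimming it cuts the bill by more than half without changing the model.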
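The FrugalGPT result rests on an LLM cascade: send a query to the cheapest model first, score its answer, and escalate only when the score falls below a threshold. FrugalGPT learns a scoring function for that step; in this minimal sketch, `toy_score`, the two model functions and the per-call costs are hypothetical stand-ins, not real APIs or prices.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tier:
    name: str
    cost_per_call: float          # hypothetical flat USD cost per call
    answer: Callable[[str], str]  # stand-in for a real model API call
    threshold: float              # minimum score needed to accept the answer


def cascade(query: str, tiers: list[Tier],
            score: Callable[[str, str], float]) -> tuple[str, str, float]:
    """Try tiers from cheapest to most expensive and stop at the first answer
    the scorer trusts. Returns (answer, tier name, total spend)."""
    if not tiers:
        raise ValueError("need at least one tier")
    spent = 0.0
    for i, tier in enumerate(tiers):
        reply = tier.answer(query)
        spent += tier.cost_per_call          # every tier tried is paid for
        is_last = i == len(tiers) - 1
        if is_last or score(query, reply) >= tier.threshold:
            return reply, tier.name, spent
    raise RuntimeError("unreachable: the last tier always returns")


def small_model(q: str) -> str:
    # Toy cheap model that only knows one fact.
    return "Paris" if "capital of France" in q else "UNSURE"


def large_model(q: str) -> str:
    # Toy expensive model that always produces an answer.
    return f"detailed answer to: {q}"


def toy_score(q: str, reply: str) -> float:
    # Hypothetical scorer: a real cascade would use a trained model here.
    return 0.1 if reply == "UNSURE" else 0.9


tiers = [Tier("small", 0.001, small_model, 0.8),
         Tier("large", 0.03, large_model, 0.0)]
print(cascade("What is the capital of France?", tiers, toy_score))
print(cascade("Draft a contract clause", tiers, toy_score))
```

An escalated query pays for every tier it passes through (0.001 + 0.03 here, not just 0.03), so a cascade only saves money when the cheap tier can confidently handle most of the traffic.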
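The Dell comparison above hinges on utilisation, because a reserved GPU node costs the same per hour whether it is busy or idle. The sketch below makes that explicit under simplifying assumptions: the hourly cost, peak throughput and API price are hypothetical inputs, not figures from the Dell paper, served throughput is assumed to scale linearly with utilisation, and staffing, power and batching effects are ignored.

```python
def self_hosted_cost_per_m(hourly_cost: float, peak_tokens_per_s: float,
                           utilisation: float) -> float:
    """USD per million tokens for a node billed per hour, busy or idle.

    Assumes served throughput scales linearly with utilisation (0 < u <= 1).
    """
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    tokens_per_hour = peak_tokens_per_s * 3600 * utilisation
    return hourly_cost / tokens_per_hour * 1_000_000


def breakeven_utilisation(hourly_cost: float, peak_tokens_per_s: float,
                          api_price_per_m: float) -> float:
    """Utilisation at which self-hosting matches the API price per million tokens."""
    return hourly_cost * 1_000_000 / (peak_tokens_per_s * 3600 * api_price_per_m)


# Hypothetical: $8/hour node, 2,000 tokens/s at full load, $5 per million via API.
for u in (0.1, 0.25, 0.5, 0.9):
    cost = self_hosted_cost_per_m(8.0, 2000, u)
    print(f"utilisation {u:.0%}: ${cost:.2f} per million tokens")
print(f"break-even utilisation: {breakeven_utilisation(8.0, 2000, 5.0):.0%}")
```

With these made-up inputs the break-even point is about 22% utilisation: below it, the API is cheaper per token, and above it, self-hosting wins by a growing margin.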

Why It Matters Now

For SMEs, this isn’t academic. The danger is a pilot that works technically but collapses financially once user traffic grows. In its 2024 LLM cost report, a16z quantified a roughly 10x annual improvement in raw inference efficiency, yet many companies saw flat or rising total bills.

Meanwhile, NVIDIA’s benchmarking warns:

“Inference remains a significant challenge due to latency, cost and compute demands.” (NVIDIA Developer Blog)

In short: cheaper tokens ≠ cheaper systems. Without governance, cost per query drifts upward until scaling stops making financial sense.
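The paradox follows from how a bill is composed. Writing monthly spend $C$ as price per token $p$ times tokens per query $t$ times queries per month $q$, and using purely illustrative growth factors (a 10x price drop, prompts and retrieved context doubling in length, traffic growing eightfold):

```latex
C = p \cdot t \cdot q
\qquad\Longrightarrow\qquad
\frac{C'}{C} = \frac{p'}{p}\cdot\frac{t'}{t}\cdot\frac{q'}{q}
= \frac{1}{10}\cdot 2 \cdot 8 = 1.6
```

In this hypothetical year, the bill rises by 60% even though each token became ten times cheaper.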

Industry Signals

Price compression wars. Chinese vendors cut API prices 50-88% in 2024 (Reuters).

Inference whales. Start-ups face a small number of customers whose token use far exceeds the average their pricing assumes (‘Inference whales’ are eating into AI coding startups’ business model).

Research focus shift. Alibaba’s End-to-End Optimization for Cost-Efficient LLMs helps push distillation and quantisation into the mainstream.

Lessons for Builders

Instrument from day one. Track input and output tokens, cost per query and the model tier that served each request.

Model-tier routing. Reserve top-tier models for complex or business-critical queries.

Prompt discipline. Trim verbosity; cache recurring system prompts and responses.

Monitor utilisation. Idle GPUs are silent cost multipliers.

Set KPIs. Define acceptable bands for cost, latency and accuracy, and revisit them quarterly.
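The “instrument from day one” and “set KPIs” lessons can start as little more than a per-call ledger. The sketch below is a minimal in-memory version; `CallRecord`, `CostLedger` and `kpi_breaches` are hypothetical names, the numbers are made up, and a production system would export these records to whatever metrics store it already uses. Accuracy is left out because it needs a labelled evaluation set rather than request logs.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean


@dataclass(frozen=True)
class CallRecord:
    tier: str            # which model tier served the request
    input_tokens: int
    output_tokens: int
    cost_usd: float
    latency_ms: float


@dataclass
class CostLedger:
    records: list[CallRecord] = field(default_factory=list)

    def log(self, record: CallRecord) -> None:
        self.records.append(record)

    def summary(self) -> dict[str, dict[str, float]]:
        """Per-tier call count, average cost, average latency and token total."""
        by_tier: dict[str, list[CallRecord]] = defaultdict(list)
        for r in self.records:
            by_tier[r.tier].append(r)
        return {
            tier: {
                "calls": len(rs),
                "avg_cost_usd": mean(r.cost_usd for r in rs),
                "avg_latency_ms": mean(r.latency_ms for r in rs),
                "tokens": sum(r.input_tokens + r.output_tokens for r in rs),
            }
            for tier, rs in by_tier.items()
        }


def kpi_breaches(summary: dict[str, dict[str, float]],
                 max_avg_cost_usd: float, max_avg_latency_ms: float) -> list[str]:
    """Return one message per tier and metric that falls outside its band."""
    breaches = []
    for tier, s in summary.items():
        if s["avg_cost_usd"] > max_avg_cost_usd:
            breaches.append(f"{tier}: avg cost ${s['avg_cost_usd']:.4f} above band")
        if s["avg_latency_ms"] > max_avg_latency_ms:
            breaches.append(f"{tier}: avg latency {s['avg_latency_ms']:.0f} ms above band")
    return breaches


ledger = CostLedger()
ledger.log(CallRecord("small", 800, 150, 0.0004, 350.0))
ledger.log(CallRecord("large", 4_500, 300, 0.014, 2_200.0))
ledger.log(CallRecord("large", 3_000, 250, 0.010, 1_800.0))
for msg in kpi_breaches(ledger.summary(), max_avg_cost_usd=0.01,
                        max_avg_latency_ms=1_500):
    print(msg)
```

Grouping by tier makes it visible when the expensive tier is absorbing traffic the routing policy meant for cheaper models.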
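For the caching half of “prompt discipline”, an exact-match response cache is the simplest option: an identical (model, system prompt, user prompt) triple is answered from memory instead of triggering a new API call. This is a hypothetical sketch; `ResponseCache` and `fake_model` are illustrative names, and the pattern is distinct from the prompt-prefix caching that some API providers run on their side.

```python
import hashlib
from typing import Callable

# (model, system_prompt, user_prompt) -> reply
ModelCall = Callable[[str, str, str], str]


class ResponseCache:
    """Exact-match cache in front of a model call (no eviction or expiry)."""

    def __init__(self, call_model: ModelCall) -> None:
        self._call_model = call_model
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, system_prompt: str, user_prompt: str) -> str:
        h = hashlib.sha256()
        for part in (model, system_prompt, user_prompt):
            data = part.encode("utf-8")
            # Length-prefix each part so ("ab", "c") and ("a", "bc") hash differently.
            h.update(len(data).to_bytes(8, "big"))
            h.update(data)
        return h.hexdigest()

    def complete(self, model: str, system_prompt: str, user_prompt: str) -> str:
        key = self._key(model, system_prompt, user_prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        reply = self._call_model(model, system_prompt, user_prompt)
        self._store[key] = reply
        return reply


calls: list[str] = []


def fake_model(model: str, system_prompt: str, user_prompt: str) -> str:
    calls.append(user_prompt)  # records how often the "API" is really hit
    return f"[{model}] answer to: {user_prompt}"


cache = ResponseCache(fake_model)
cache.complete("small", "You are a support bot.", "How do I reset my password?")
cache.complete("small", "You are a support bot.", "How do I reset my password?")
print(cache.hits, cache.misses, len(calls))  # → 1 1 1
```

Exact-match caching only pays off where requests repeat verbatim, such as FAQ-style support traffic. It is unsuitable for personalised or time-sensitive answers, and a real deployment would add eviction and expiry.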

What the Next Phase Explores

Part 2 of this series will move from cost anatomy to engineering strategies: model tiering, prompt compaction, dynamic RAG routing and hybrid deployments. Expect real-world data and implementation guidance drawn from current FinOps and LLMOps research.

We’re FortifyRoot, the LLM Cost, Safety & Audit Control Layer for Production GenAI.

If you’re facing unpredictable LLM spend or safety risks, or need auditability across GenAI workloads, we’d be glad to help.