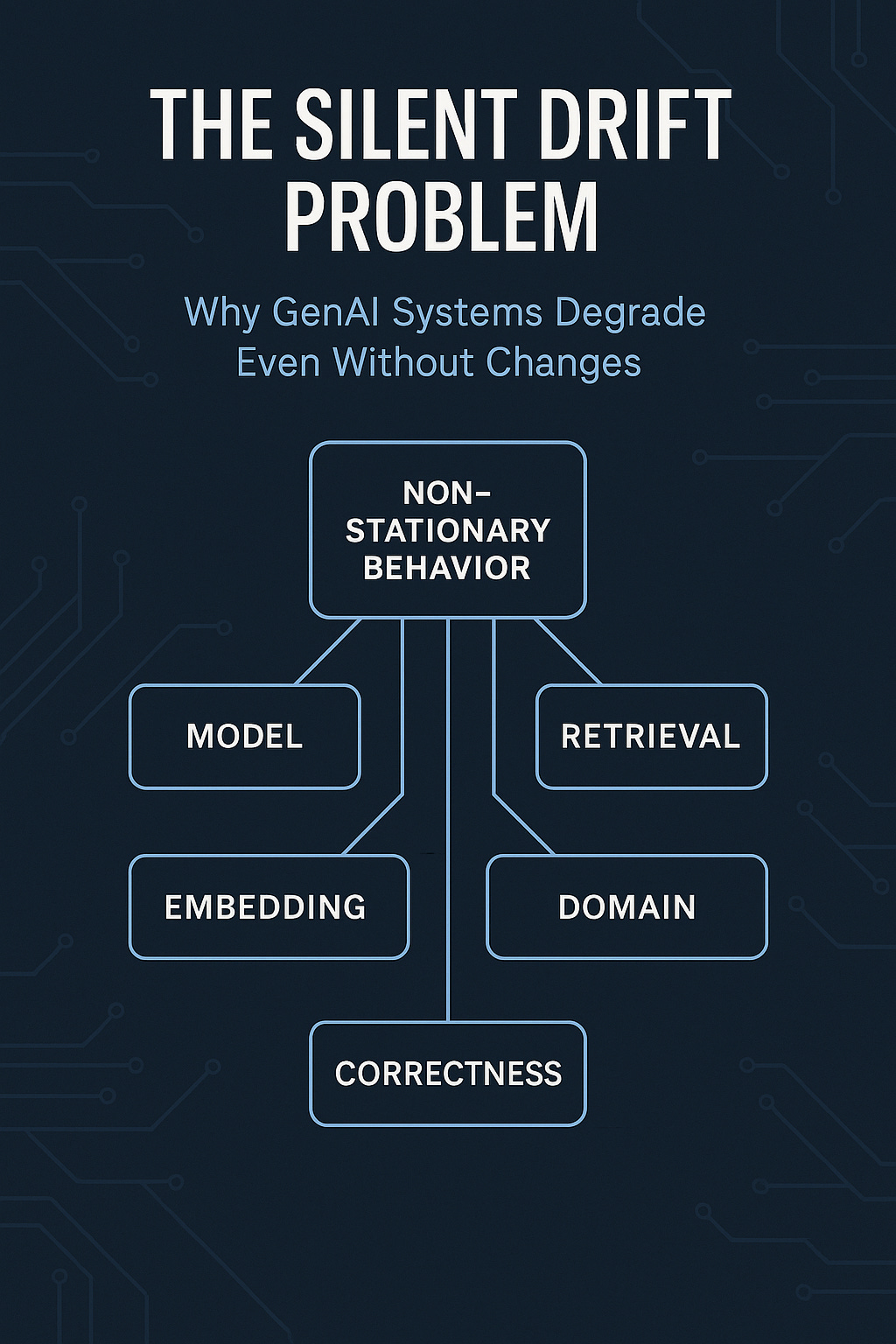

LLM Drift & Quality Decay Part 1: The Silent Drift Problem: Why GenAI Systems Degrade Even Without Changes

LLMs don’t fail loudly - they fail gradually.

GenAI systems don’t fail the way traditional software does.

They don’t throw 500s.

They don’t crash.

They don’t show memory leaks or CPU saturation.

Instead, they fail silently.

A model that produced crisp, grounded, helpful answers in January slowly becomes less accurate, less relevant, more generic or more cautious by March.

Teams notice subtle signals:

“This feels different from last month.”

“Why is retrieval pulling irrelevant chunks now?”

“Accuracy seems lower but nothing has changed.”

“Why is the model refusing safe instructions suddenly?”

This is the reality of LLM Drift - the most widespread, underdiagnosed failure mode in production GenAI systems today.

And the paradox is simple:

Nothing changed in your code.

But the system changed anyway.

This part explains the five root causes of drift, the symptoms that appear before decay becomes severe, and why drift is not a bug but a fundamental property of GenAI systems.

Later in this series:

Part 2 will detail how to engineer drift detection that catches issues before users complain.

Part 3 will introduce QRI (Quality Reliability Index) - the governance layer that turns quality into a trackable KPI.

The Drift Paradox: Why LLM Systems Change Even When You Don’t

In classical software, versioning, deployments and infra updates are fully controlled. If behavior changes, there’s a direct cause. LLMs break this mental model.

LLM pipelines change due to factors you do not control:

Provider-side model updates

Embedding model updates

Safety filter changes

Retrieval data evolution

Domain shifts

User-query distribution changes

Prompt variance buildup

RAG index aging

Context inflation

This creates the paradoxical experience:

“We touched nothing, but the output is different.”

Before diving into the mechanics, here’s the observation that matters:

LLMs are non-stationary systems.

Their behavior drifts over time, even with zero code changes. And for enterprises without evaluation pipelines, this drift accumulates unnoticed until it becomes a major outage.

The Five Primary Forms of LLM Drift

Drift is not one problem - it is a cluster of interconnected phenomena.

Below are the five types seen most consistently across SMEs.

1. Model Drift (Provider-Side Updates)

Model providers frequently:

Optimize inference

Adjust routing tiers

Modify sampling defaults

Patch safety layers

Alter attention constraints

Update prompt templates

Introduce new alignment rules

This causes shifts in:

Tone

Answer structure

Grounding behavior

Hallucination frequency

Refusal patterns

Output length

Latency

This is the most common drift type - and the hardest for enterprises to detect. Recent work has shown that even “versioned” LLMs can experience behavioral drift due to silent provider-side updates in routing, alignment layers and decoding defaults, leading to measurable semantic shifts over weeks (see Zheng et al., 2025).
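One of the shifts listed above, output length, is cheap to track. The sketch below is a minimal illustration, not a full detector: it assumes you log response lengths (in tokens or characters) for a fixed set of prompts, and it reports how far the current mean has moved in units of the baseline standard deviation. The function name `length_shift` and the sample numbers are hypothetical.

```python
import math
from statistics import mean, stdev


def length_shift(baseline_lengths, current_lengths):
    """Return the change in mean output length, in baseline standard deviations.

    baseline_lengths: lengths logged during a period you trust (needs >= 2 samples).
    current_lengths:  lengths logged for the same prompts in the current window.
    """
    base_mean = mean(baseline_lengths)
    base_sd = stdev(baseline_lengths)
    delta = mean(current_lengths) - base_mean
    if base_sd == 0:
        # A perfectly constant baseline: any movement at all is "infinitely" unusual.
        return 0.0 if delta == 0 else math.copysign(math.inf, delta)
    return delta / base_sd


# Hypothetical logged lengths for the same prompt set, one month apart.
january = [100, 110, 90, 105, 95]
march = [140, 150, 145]
shift = length_shift(january, march)
```

A large positive or negative value does not say why the output changed, only that it is worth comparing responses side by side. The same pattern applies to latency or any other numeric signal you already log.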

2. Embedding Drift (Vector Space Shifts)

Embedding models change even more frequently than LLMs.

Updates to:

Tokenization

Vector normalization

Dimensionality

Underlying training data

Semantic clustering

...cause your entire vector index to drift relative to new embeddings. Studies (such as Liu et al., 2025) have found that this drift breaks retrieval consistency.

RAG pipelines suffer heavily from this: Old vectors ≠ new vectors. Even slight changes break retrieval alignment.
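To make "old vectors ≠ new vectors" concrete, here is a minimal sketch that assumes you keep the stored vectors for a small set of fixed canary texts and periodically re-embed those same texts. The helper names and the 0.99 threshold are illustrative assumptions, not values from any provider.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        # A dimensionality change means the old index is unusable as-is.
        raise ValueError("vector dimensionality changed")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def drifted_canaries(stored, fresh, min_similarity=0.99):
    """Return IDs of canary texts whose fresh embedding moved away from the stored one.

    stored, fresh: dicts mapping a canary text ID to its embedding vector.
    min_similarity: assumed threshold; tune it against your own baseline noise.
    """
    return sorted(
        text_id
        for text_id, old_vec in stored.items()
        if cosine_similarity(old_vec, fresh[text_id]) < min_similarity
    )


# Toy 2-dimensional vectors standing in for real embeddings.
stored = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
fresh = {"a": [1.0, 0.0], "b": [1.0, 1.0]}
moved = drifted_canaries(stored, fresh)  # "b" no longer matches its stored vector
```

If the same text no longer maps to (nearly) the same vector, queries embedded today are being compared against an index built in a different space, and a re-index is likely needed.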

3. Retrieval Drift (Aging Knowledge Corpus)

Retrieval quality degrades due to:

Outdated documents

Metadata misalignment

Chunk-level topic drift

Incorrect prioritization

Index bloat

Domain-document distribution shift

When retrieval decays, the LLM’s quality decays even if the LLM itself is stable.

Retrieval Drift is the #1 cause of “mysterious hallucinations” in enterprises.
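Of the causes above, outdated documents are the simplest to measure. The sketch below assumes each indexed document carries a timezone-aware last-updated timestamp; the function name `stale_fraction` and the 180-day cutoff are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone


def stale_fraction(last_updated, now, max_age_days=180):
    """Return the share of indexed documents older than max_age_days.

    last_updated: dict mapping a document ID to a timezone-aware datetime.
    now:          the reference time, passed in so the check is reproducible.
    """
    if not last_updated:
        return 0.0
    cutoff = now - timedelta(days=max_age_days)
    stale = sum(1 for ts in last_updated.values() if ts < cutoff)
    return stale / len(last_updated)


# Hypothetical index metadata.
docs = {
    "pricing-faq": datetime(2025, 5, 1, tzinfo=timezone.utc),
    "legacy-onboarding": datetime(2024, 1, 1, tzinfo=timezone.utc),
}
share = stale_fraction(docs, now=datetime(2025, 6, 1, tzinfo=timezone.utc))
```

Tracking this number over time shows whether the corpus is aging faster than it is being refreshed. It says nothing about whether an old document is actually wrong, so treat it as a prompt for review rather than a verdict.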

4. Domain Drift (The World Evolves, The Model Doesn’t)

LLMs freeze at training time.

Meanwhile, your world changes:

Product features

Pricing

Compliance rules

Organizational structure

Customer terminology

Regulatory environment

Market vocabulary

This creates a widening gap between what the model believes and what your business now requires.

5. Safety & Alignment Drift

Safety-guideline changes can cause:

Sudden refusals

Over-cautious tone

Unnecessary disclaimers

Hallucinated safety messages

Blocked harmless queries

This happens because alignment layers evolve in the provider stack.
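A rough way to watch for this is to replay a fixed set of known-harmless prompts and track how often the responses look like refusals. The sketch below uses a simple phrase match, which is an assumption for illustration only: the marker list is hypothetical, will miss many refusal styles, and is no substitute for a proper classifier.

```python
# Hypothetical marker phrases; extend them from refusals you actually observe.
REFUSAL_MARKERS = (
    "i can't help",
    "i cannot help",
    "i can't assist",
    "i cannot assist",
    "i'm unable to",
    "i am unable to",
)


def is_refusal(response):
    """Heuristic: does the response contain a known refusal phrase?"""
    # Normalize case and curly apostrophes before matching.
    text = response.lower().replace("\u2019", "'")
    return any(marker in text for marker in REFUSAL_MARKERS)


def refusal_rate(responses):
    """Return the fraction of responses flagged as refusals."""
    if not responses:
        return 0.0
    return sum(is_refusal(r) for r in responses) / len(responses)


# Responses to prompts that are known to be safe.
responses = [
    "Sure, here is the summary.",
    "I can\u2019t help with that request.",
    "I'm unable to assist.",
    "Here you go.",
]
rate = refusal_rate(responses)
```

Because the prompts are known to be safe, the expected rate is close to zero, so a rise between runs points at a change in the provider stack rather than in your traffic.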

Why Drift Matters More in Some Sectors

Drift affects every GenAI deployment - but it is mission-critical in certain industries due to regulatory exposure, financial risk, or user trust.

A short, non-exhaustive overview:

Fintech & Lending

Drift in summarization or decision-support leads to:

Inconsistent recommendation tone

Hallucinated financial advice

Missing disclaimers

Mismatched thresholds

Accuracy and stability are legally sensitive.

Healthcare & MedTech

Drift influences symptom classification, medical summarization and clinical Q&A. Even a small percentage drop in grounding can carry clinical risk.

HRTech & Recruiting

Drift affects summarization, candidate scoring and policy alignment. Bias can unintentionally increase or decrease over time.

Customer Support Platforms

Drift leads to:

Incorrect troubleshooting steps

Missing context

Outdated product knowledge

Wrong escalation paths

This lowers CSAT (Customer Satisfaction Score) and increases churn.

LegalTech & Compliance Automation

Grounding drift or safety drift creates:

Misinterpreted policies

Hallucinated legal interpretations

Compliance violations

This is a high-stakes domain.

SaaS Platforms Integrating GenAI

Drift directly impacts product reliability, onboarding and automation quality.

GenAI drift is universal - but for these sectors, it’s existentially critical. This trilogy gives you tools to manage it.

Early Warning Signals of Drift

Like structural cracks in a bridge, drift presents subtle symptoms before failure.

Teams should watch for:

Grounding Score Drop: Output becomes less aligned to retrieved evidence.

Retrieval Overlap Decline: Same query → different chunks.

Embedding Distance Shift: New embeddings diverge significantly from historical vectors.

Increase in Refusals: Safety drift causing unintentional over-blocking.

Output Tone Variability: AI stops sounding like the same assistant.

“Overconfident Wrong Answers”: A spike in confident hallucinations is a major drift signal.

User Complaints: When users notice drift, it’s already severe.
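Several of these signals reduce to simple comparisons against a stored baseline. As one example, retrieval overlap can be sketched as the Jaccard similarity between the chunk IDs a query returned at baseline and the IDs it returns now. The function names, chunk IDs and the 0.6 threshold below are illustrative assumptions.

```python
def retrieval_overlap(baseline_ids, current_ids):
    """Jaccard similarity between two sets of retrieved chunk IDs (1.0 = identical)."""
    base, cur = set(baseline_ids), set(current_ids)
    if not base and not cur:
        return 1.0
    return len(base & cur) / len(base | cur)


def overlap_alerts(baseline, current, min_overlap=0.6):
    """Return the queries whose retrieved chunks diverged from the baseline.

    baseline, current: dicts mapping a query string to a list of chunk IDs.
    min_overlap:       assumed threshold; calibrate it on your own data.
    """
    return sorted(
        query
        for query, ids in baseline.items()
        if retrieval_overlap(ids, current.get(query, [])) < min_overlap
    )


# Hypothetical snapshots of top-k retrieval results for two fixed queries.
baseline = {"refund policy": ["c1", "c2", "c3"], "reset password": ["c7", "c8"]}
current = {"refund policy": ["c1", "c2", "c3"], "reset password": ["c8", "c9"]}
alerts = overlap_alerts(baseline, current)
```

Jaccard ignores ranking order, which keeps the check simple. If the position of a chunk matters in your pipeline, a rank-aware measure would be a better fit.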

Why Drift Is Inevitable

Drift is not a preventable bug. It is a fundamental property of:

Non-deterministic models

Provider-side updates

Shifting context windows

Evolving knowledge corpora

Changing safety layers

Domain volatility

This phenomenon aligns with recent findings on the “half-life of truth” in LLMs, where factual grounding decays over time due to semantic drift, retrieval misalignment and recursive generation instability (see Sharma et al., 2025).

Which means:

At present, you cannot prevent drift. You can only detect and govern it.

What’s Next in This Series

In Part 2, we’ll move from problem exposure to engineering practice:

Grounding monitors

Retrieval consistency checks

Embedding stability testing

Golden-set evaluation

Canary queries

Drift thresholds

Drift Engine reference architecture

We’re FortifyRoot - the LLM Cost, Safety & Audit Control Layer for Production GenAI.

If you’re facing unpredictable LLM spend, safety risks or need auditability across GenAI workloads - we’d be glad to help.