The Hidden Complexity of Scaling GenAI Systems Part 3: AI Reliability Governance: Turning Performance and Trust into KPIs

Why Reliability Needs Governance

In Part 1 we saw that fragility precedes cost, and in Part 2 that observability reveals what’s breaking. But visibility alone isn’t governance; it’s diagnosis.

Without accountability loops, metrics stay in dashboards instead of boardrooms. Most SMEs lack a defined reliability owner, leaving uptime and cost variance unmanaged.

The Shift from FinOps to Reliability Ops

Traditional FinOps asks: “How much did we spend?”

Reliability Ops asks: “Was it worth it?”

A team that focuses only on token savings might miss that system uptime or latency has deteriorated. Governance unites cost control and performance quality in a single operating discipline.

Introducing the AI Reliability Index (ARI)

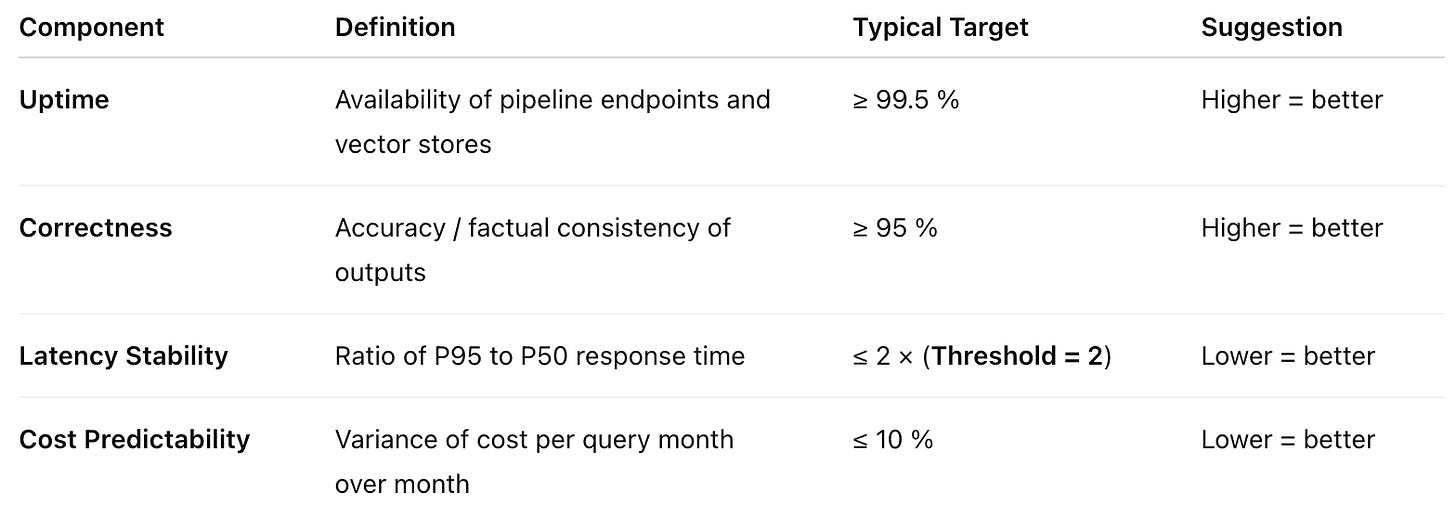

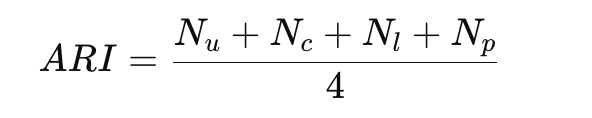

To make reliability quantifiable, FortifyRoot defines a composite index that averages four normalized sub-metrics, so that each factor (uptime, correctness, latency stability and cost predictability) contributes equally to an overall score between 0 and 1.

Component Overview

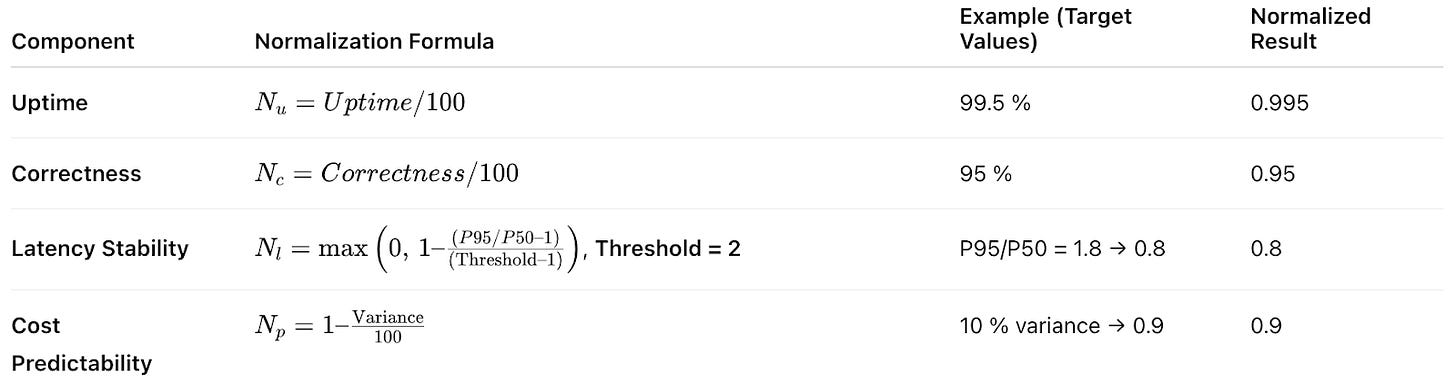

Normalization Formulas

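Latency values beyond the threshold are capped at 0. Without the cap, the normalized latency score would turn negative once latency exceeds the threshold, pushing that sub-metric outside the 0 to 1 range and dragging the averaged index down disproportionately.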
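The exact normalization formulas are not reproduced in this text, so the sketch below assumes a simple linear form for the latency sub-metric: score = 1 − observed / threshold, clamped to the range 0 to 1. The function name `normalize_latency` and its parameters are hypothetical. It shows how the cap keeps an over-threshold measurement at 0 instead of letting it go negative.

```python
def normalize_latency(observed_ms: float, threshold_ms: float) -> float:
    """Map a latency measurement to a 0-1 score (hypothetical linear form).

    A value of 0 ms scores 1.0; a value at or beyond the threshold scores 0.0.
    """
    if threshold_ms <= 0:
        raise ValueError("threshold_ms must be positive")
    raw = 1.0 - observed_ms / threshold_ms
    # Cap at 0 so latencies beyond the threshold cannot produce a negative
    # score; cap at 1 in case a negative measurement slips through.
    return min(1.0, max(0.0, raw))


print(normalize_latency(200, 1000))   # well within the threshold
print(normalize_latency(1500, 1000))  # beyond the threshold, capped at 0.0
```

Clamping both ends keeps every sub-metric on the same 0 to 1 scale, which is what allows a plain average to be meaningful.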

Example Calculation

Substituting example values for the four sub-metrics:

ARI = (0.995 + 0.95 + 0.8 + 0.9) / 4 = 3.645 / 4 = 0.91125 ≈ 0.91

✅ Result: ARI ≈ 0.91 → Production-grade reliability
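The equal-weight calculation above can be sketched in Python as follows. The function `ari` and its argument names are illustrative, and mapping the four example values to uptime, correctness, latency stability and cost predictability in that order is an assumption.

```python
from statistics import fmean


def ari(uptime: float, correctness: float,
        latency_stability: float, cost_predictability: float) -> float:
    """Equal-weight AI Reliability Index: the mean of four 0-1 sub-metrics."""
    scores = (uptime, correctness, latency_stability, cost_predictability)
    for score in scores:
        # Each input is expected to be normalized to [0, 1] already.
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"sub-metric {score} is outside [0, 1]")
    return fmean(scores)


score = ari(0.995, 0.95, 0.8, 0.9)
print(f"ARI = {score:.3f}")  # ARI = 0.911
```

Rejecting out-of-range inputs surfaces a broken normalization step early rather than letting it silently skew the index.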

While ARI weights all four metrics equally, enterprises value reliability dimensions differently. A capable LLMOps platform should let teams assign custom weights or extend ARI with business KPIs: for instance, prioritising correctness over latency in regulated domains, or cost predictability over uptime for batch workloads. This flexibility keeps reliability metrics aligned with each organisation’s own definition of “production-grade” performance.
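One way to support custom weights is a normalized weighted mean, sketched below. The function `weighted_ari` and the example regulated-domain weights are hypothetical, not a FortifyRoot API. Weights are treated as relative values, so they need not sum to 1, and equal weights reproduce the standard ARI.

```python
from typing import Mapping


def weighted_ari(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted mean of 0-1 sub-metrics; weights are relative, not required to sum to 1."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same metrics")
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return sum(scores[name] * weights[name] for name in scores) / total


scores = {"uptime": 0.995, "correctness": 0.95,
          "latency_stability": 0.8, "cost_predictability": 0.9}

# Hypothetical regulated-domain profile: correctness matters most, latency least.
regulated = {"uptime": 3, "correctness": 4,
             "latency_stability": 1, "cost_predictability": 2}
equal = {name: 1 for name in scores}

print(f"weighted ARI = {weighted_ari(scores, regulated):.2f}")  # weighted ARI = 0.94
print(f"equal-weight ARI = {weighted_ari(scores, equal):.2f}")  # equal-weight ARI = 0.91
```

Dividing by the weight total means teams can express priorities as simple ratios (3:4:1:2 here) without manually rescaling them.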

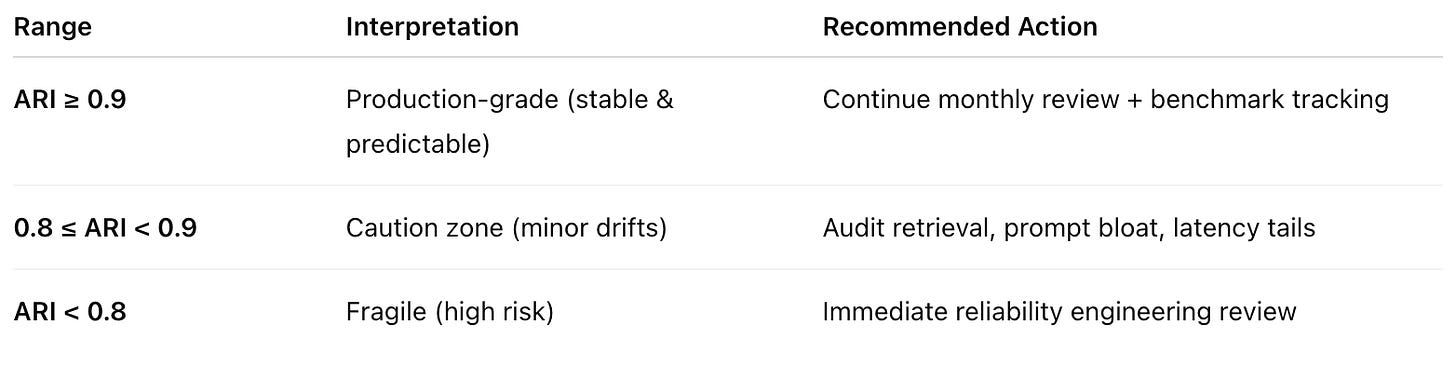

Interpretation Guide

Reliability Governance Loop

Measure: Collect telemetry on uptime, correctness, latency and cost.

Review: Cross-functional monthly review with AI lead, product owner and finance.

Optimise: Prioritise root causes (prompt inflation, index bloat, model-tier misuse).

Educate: Share insights in engineering retros to create a learning culture.

Governance turns metrics into rituals and rituals into discipline.

Governance Checklist

✅ Assign KPI owners (Uptime → Infra Lead, Correctness → Data Scientist, Cost → FinOps).

✅ Track ARI weekly and publish trend charts; flag if ARI stays below 0.85 for two consecutive weeks or drops more than 5% month-on-month.

✅ Alert if latency variability exceeds 2x baseline or token variance exceeds 20%.

✅ Include ARI score in executive dashboards and board reviews.

✅ Conduct quarterly audit of data privacy and security settings.

✅ Reward teams that improve reliability without extra spend.
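The ARI and alerting thresholds in the checklist can be encoded as simple rules. This is a minimal sketch under stated assumptions: the month-on-month drop is treated as a relative decline, the latency rule is read as a latency-variability measure (such as p95 jitter) exceeding twice its baseline, and token variance is read as the relative deviation of average tokens per request from a baseline. All function and parameter names are hypothetical.

```python
from typing import List, Sequence

ARI_FLOOR = 0.85             # flag if ARI stays below this...
CONSECUTIVE_WEEKS = 2        # ...for this many consecutive weeks
MOM_DROP_LIMIT = 0.05        # relative month-on-month drop (assumption)
LATENCY_MULTIPLIER = 2.0     # alert above 2x the latency baseline
TOKEN_VARIANCE_LIMIT = 0.20  # alert above 20% deviation from token baseline


def ari_below_floor(weekly_ari: Sequence[float]) -> bool:
    """True if the most recent weekly ARI values are all below the floor."""
    recent = weekly_ari[-CONSECUTIVE_WEEKS:]
    return len(recent) == CONSECUTIVE_WEEKS and all(v < ARI_FLOOR for v in recent)


def ari_mom_drop(prev_month: float, this_month: float) -> bool:
    """True if monthly ARI fell by more than the limit, relative to last month."""
    if prev_month <= 0:
        return False
    return (prev_month - this_month) / prev_month > MOM_DROP_LIMIT


def latency_alert(current: float, baseline: float) -> bool:
    """True if the latency-variability measure exceeds 2x its baseline."""
    return current > LATENCY_MULTIPLIER * baseline


def token_alert(current_avg: float, baseline_avg: float) -> bool:
    """True if average tokens per request deviate more than 20% from baseline."""
    if baseline_avg <= 0:
        raise ValueError("baseline_avg must be positive")
    return abs(current_avg - baseline_avg) / baseline_avg > TOKEN_VARIANCE_LIMIT


def evaluate(weekly_ari: Sequence[float], prev_month_ari: float,
             this_month_ari: float, latency_now: float, latency_baseline: float,
             tokens_now: float, tokens_baseline: float) -> List[str]:
    """Return the names of all rules that fire."""
    alerts = []
    if ari_below_floor(weekly_ari):
        alerts.append("ari_below_floor")
    if ari_mom_drop(prev_month_ari, this_month_ari):
        alerts.append("ari_mom_drop")
    if latency_alert(latency_now, latency_baseline):
        alerts.append("latency")
    if token_alert(tokens_now, tokens_baseline):
        alerts.append("tokens")
    return alerts


print(evaluate(weekly_ari=[0.90, 0.84, 0.83],
               prev_month_ari=0.91, this_month_ari=0.85,
               latency_now=450, latency_baseline=200,
               tokens_now=1100, tokens_baseline=1000))
# ['ari_below_floor', 'ari_mom_drop', 'latency']
```

Keeping each threshold as a named constant makes it easy for the KPI owners listed above to tune their own rule without touching the others.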

Embedding Reliability as Culture

Governance is not compliance; it’s a habit. When engineers see reliability metrics in daily dashboards and leaders review them alongside financial KPIs, trust emerges organically.

Enterprises can begin with simple habits:

Include reliability targets in OKRs.

Publish a live incident log on internal dashboards, with a running count of total incidents and the days since the last one.

Hold cross-team “AI post-mortems” after each incident.

Over time, these habits create a culture where AI systems are treated like critical infrastructure, because they are.

Key Takeaways

Reliability must be governed, not assumed.

The AI Reliability Index (ARI) quantifies trust and performance together.

Governance loops transform metrics into action.

Enterprises that treat reliability as a product feature build durable AI foundations.

We’re FortifyRoot, the LLM Cost, Safety & Audit Control Layer for Production GenAI.

If you’re facing unpredictable LLM spend or safety risks, or need auditability across GenAI workloads, we’d be glad to help.
